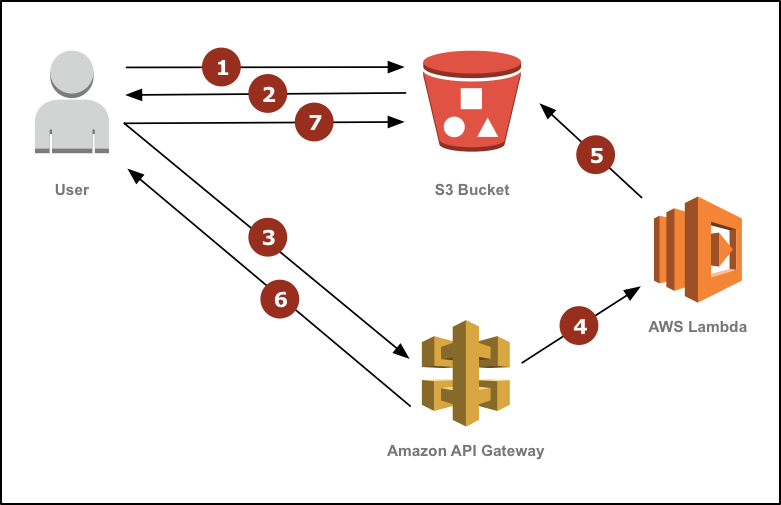

AWS Lambda allows developers to run code without provisioning or managing servers. When combined with Amazon S3’s simple storage service, it provides a scalable way to process files as they are uploaded to S3 buckets. Here are the main steps to set up an automated file processing workflow using Lambda and S3.

Creating the Lambda Function

The first step is to create the Lambda function that will handle file processing. In the Lambda console, give the function a name and description. Select Python 3.8 as the runtime since the example code provided is in Python. For the role, assign it the Lambda role policy so it has permissions to access S3 buckets and objects. Upload your processing code or link to a code repository. Configure the function to trigger on S3 object create events.

Selecting the S3 Bucket

Next, select or create the S3 bucket where files will be uploaded. This bucket should be in the same AWS region as the Lambda function for optimal performance. Make sure the bucket name is unique across all existing bucket names in S3. The bucket must also allow public read access so Lambda has permission to retrieve objects when triggered.

Configuring the Trigger

Go back to the Lambda console and select the configuration tab. Configure a trigger by selecting S3 as the event source. Choose the bucket selected in the previous step from the dropdown. Save the changes so that uploads to this bucket will now trigger the Lambda function.

Setting Access Permissions

The final setup step is to add permissions so Lambda can access objects in the bucket when events occur. Go to the IAM console, select the role assigned to the Lambda function, and attach the AmazonS3FullAccess managed policy. This grants the necessary permissions for Lambda to read S3 objects and process files.

Testing the Workflow

To test the automated workflow, upload a sample file to the S3 bucket. Check the Lambda logs and CloudTrail to verify the function was triggered and ran successfully. TheLambda code can write outputback to S3 for storage or trigger additional processes as needed. With this setup, files uploaded to the S3 bucket will now automatically trigger the Lambda function for processing at scale.

Processing Large Image Files

One common use case is processing large image files uploaded to S3. The Lambda function code could analyze image metadata, generate thumbnails, run image recognition algorithms, or convert formats. For large image files, the function could split processing across multiple asynchronously invoked functions using the file size.

Parsing and Indexing JSON/CSV Data

For unstructured data files like JSON or CSV, the Lambda function might parse records, clean fields, index data to DynamoDB for querying. For large data files, the function code could divide parsing tasks across many temporary function invocations to reduce processing time. DynamoDB streams could then trigger additional processing of indexed records.

Extracting Video Metadata

Video files uploaded to S3 could have metadata like subtitles, keywords, or speech-to-text extracted by Lambda. For long videos, the function could split video processing into segments handled by separate functions to speed up metadata extraction at scale. Metadata could then be stored back in S3 or a database.

Transforming Media Formats

Different file formats like MP4, MOV, JPG could be converted to other desired formats for streaming or website use. Media transcoding is a CPU intensive task well suited to the elasticity of AWS Lambda. For complex format changes, the function could offload segments of reformatting work to many invoked functions working in parallel.

In summary, AWS Lambda and S3 provide developers an easy way to build scalable file processing systems without having to manage servers or worry about capacity planning. The pay-as-you-go pricing model also keeps costs low for intermittent or bursty workloads. With just a few configuration steps, any type of automated file processing or transformation can be set up to run whenever new objects are uploaded to S3.

Exploring Different Ways to Define the Main Method in Java

Exploring Different Ways to Define the Main Method in Java